We are introducing GPT‑5, our best AI system yet. GPT‑5 is a significant leap in intelligence over all our previous models, featuring state-of-the-art performance across coding, math, writing, health, visual perception, and more. It is a unified system that knows when to respond quickly and when to think longer to provide expert-level responses. GPT‑5 is available to all users, with Plus subscribers getting more usage, and Pro subscribers getting access to GPT‑5 pro, a version with extended reasoning for even more comprehensive and accurate answers.

GPT‑5 is a unified system with a smart, efficient model that answers most questions, a deeper reasoning model (GPT‑5 thinking) for harder problems, and a real‑time router that quickly decides which to use based on conversation type, complexity, tool needs, and your explicit intent (for example, if you say “think hard about this” in the prompt). The router is continuously trained on real signals, including when users switch models, preference rates for responses, and measured correctness, improving over time. Once usage limits are reached, a mini version of each model handles remaining queries. In the near future, we plan to integrate these capabilities into a single model.
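The routing behavior described above can be pictured with a small rule-based sketch. This is only an illustration: the production router is described as a trained model, and every name here (`Request`, `route`, the `complexity` score, the `0.6` threshold, and the model labels) is a hypothetical stand-in rather than anything OpenAI has published.

```python
from dataclasses import dataclass

# Hypothetical labels; these are not real model identifiers.
FAST, THINKING = "fast-model", "thinking-model"


@dataclass
class Request:
    prompt: str
    complexity: float       # assumed 0.0-1.0 score from some upstream estimator
    needs_tools: bool       # whether the request appears to need tool calls
    over_usage_limit: bool  # whether the user has exhausted their quota


def route(req: Request, threshold: float = 0.6) -> str:
    """Pick a model label from the signals the post lists."""
    explicit = "think hard" in req.prompt.lower()  # explicit user intent
    wants_reasoning = explicit or req.needs_tools or req.complexity >= threshold
    model = THINKING if wants_reasoning else FAST
    # Once usage limits are reached, a mini version of each model takes over.
    return f"{model}-mini" if req.over_usage_limit else model


print(route(Request("What is 2 + 2?", 0.1, False, False)))
print(route(Request("Think hard about this proof", 0.1, False, False)))
print(route(Request("Summarize this", 0.9, False, True)))
```

A learned router would replace the fixed threshold with a model trained on signals such as model switches, preference rates, and measured correctness; the sketch only shows how those inputs could map to a choice.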

GPT‑5 not only outperforms previous models on benchmarks and answers questions more quickly, but—most importantly—is more useful for real-world queries. We’ve made significant advances in reducing hallucinations, improving instruction following, and minimizing sycophancy, while leveling up GPT‑5’s performance in three of ChatGPT’s most common uses: writing, coding, and health.

GPT‑5 is our strongest coding model to date. It shows particular improvements in complex front‑end generation and debugging larger repositories. It can often create beautiful and responsive websites, apps, and games with an eye for aesthetic sensibility in just one prompt, intuitively and tastefully turning ideas into reality. Early testers also noted its design choices, with a much better understanding of things like spacing, typography, and white space. See here for full details on what GPT‑5 unlocks for developers.

Here are some examples of what GPT‑5 has created with just one prompt:

Prompt: Create a single-page app in a single HTML file with the following requirements:

- Name: Jumping Ball Runner

- Goal: Jump over obstacles to survive as long as possible.

- Features: Increasing speed, high score tracking, retry button, and funny sounds for actions and events.

- The UI should be colorful, with parallax scrolling backgrounds.

- The characters should look cartoonish and be fun to watch.

- The game should be enjoyable for everyone.

GPT‑5 is our most capable writing collaborator yet, able to help you steer and translate rough ideas into compelling, resonant writing with literary depth and rhythm. It more reliably handles writing that involves structural ambiguity, such as sustaining unrhymed iambic pentameter or free verse that flows naturally, combining respect for form with expressive clarity. These improved writing capabilities mean that ChatGPT is better at helping you with everyday tasks like drafting and editing reports, emails, memos, and more. The writing styles of GPT‑5 and GPT‑4o can be compared in the table below.

GPT‑5 is our best model yet for health-related questions, empowering users to be informed about and advocate for their health. The model scores significantly higher than any previous model on HealthBench, an evaluation we published earlier this year based on realistic scenarios and physician-defined criteria. Compared to previous models, it acts more like an active thought partner, proactively flagging potential concerns and asking questions to give more helpful answers. The model also now provides more precise and reliable responses, adapting to the user’s context, knowledge level, and geography, enabling it to provide safer and more helpful responses in a wide range of scenarios. Importantly, ChatGPT does not replace a medical professional—think of it as a partner to help you understand results, ask the right questions in the time you have with providers, and weigh options as you make decisions.

You can see some of the ways GPT‑5 is better than our previous models across domains—richer, more detailed, and useful—in these examples:

[Example responses: GPT‑4o and GPT‑5]

GPT‑5’s response lands the larger emotional arc with a stronger ending, clear imagery, and striking metaphors (“black flags of a country that no longer exists,” “Kyoto’s bell rolls evening down the hill”) that establish a vivid sense of culture and place. GPT‑4o’s version follows a more predictable structure and rhyme scheme, telling instead of showing (“she weeps and doesn’t tell”).

*We chose a response between 4o and OpenAI o3 based on whichever model performed better between the two for the given prompt.

GPT‑5 is much smarter across the board, as reflected by its performance on academic and human-evaluated benchmarks, particularly in math, coding, visual perception, and health. It sets a new state of the art across math (94.6% on AIME 2025 without tools), real-world coding (74.9% on SWE-bench Verified, 88% on Aider Polyglot), multimodal understanding (84.2% on MMMU), and health (46.2% on HealthBench Hard)—and those gains show up in everyday use. With GPT‑5 pro’s extended reasoning, the model also sets a new SOTA on GPQA, scoring 88.4% without tools.

*AIME results with tools should not be compared directly to the performance of models without tool access; they are an example of how effectively GPT‑5 leverages available tools.

All SWE-bench evaluation runs use a fixed subset of n=477 verified tasks which have been validated on our internal infrastructure.
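To put the subset size in perspective, the short calculation below converts the reported 74.9% into an approximate task count and a rough sampling uncertainty. The binomial standard error is an assumption added here (it treats tasks as independent trials); it is not part of the published methodology.

```python
import math

n_tasks = 477      # fixed subset size stated above
pass_rate = 0.749  # reported SWE-bench Verified score

# Approximate number of tasks solved at that rate.
solved = round(pass_rate * n_tasks)

# Binomial standard error, assuming independent tasks (our assumption).
std_err = math.sqrt(pass_rate * (1 - pass_rate) / n_tasks)

print(solved)                   # 357
print(round(100 * std_err, 1))  # 2.0 (percentage points)
```

Under that assumption, differences of a point or two between models on this subset fall within about one standard error.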

GPT‑5 shows significant gains in benchmarks that test instruction following and agentic tool use, the kinds of capabilities that let it reliably carry out multi-step requests, coordinate across different tools, and adapt to changes in context. In practice, this means it’s better at handling complex, evolving tasks; GPT‑5 can follow your instructions more faithfully and get more of the work done end-to-end using the tools at its disposal.

The model excels across a range of multimodal benchmarks, spanning visual, video-based, spatial, and scientific reasoning. Stronger multimodal performance means ChatGPT can reason more accurately over images and other non-text inputs—whether that’s interpreting a chart, summarizing a photo of a presentation, or answering questions about a diagram.

GPT‑5 is also our best performing model on an internal benchmark measuring performance on complex, economically valuable knowledge work. When using reasoning, GPT‑5 is comparable to or better than experts in roughly half the cases, while outperforming o3 and ChatGPT Agent, across tasks spanning over 40 occupations including law, logistics, sales, and engineering.

Methodology for evaluations above: Results for GPT‑4o reflect the most recent version of the model in ChatGPT as of August 2025. All models are evaluated at high ‘reasoning effort’ settings. Reasoning effort can vary in ChatGPT, with high representing the upper bound of what a user might experience when using the model.

GPT‑5 gets more value out of less thinking time. In our evaluations, GPT‑5 (with thinking) performs better than OpenAI o3 with 50-80% fewer output tokens across capabilities, including visual reasoning, agentic coding, and graduate-level scientific problem solving.

GPT‑5 was trained on Microsoft Azure AI supercomputers.

GPT‑5 is significantly less likely to hallucinate than our previous models. With web search enabled on anonymized prompts representative of ChatGPT production traffic, GPT‑5’s responses are ~45% less likely to contain a factual error than GPT‑4o, and when thinking, GPT‑5’s responses are ~80% less likely to contain a factual error than OpenAI o3.

We’ve particularly invested in making our models more reliable when reasoning on complex, open-ended questions. Accordingly, we’ve added new evaluations to stress‑test open-ended factuality. We measured GPT‑5’s hallucination rate when thinking on open-ended fact-seeking prompts from two public factuality benchmarks: LongFact (concepts and objects) and FActScore. Across all of these benchmarks, “GPT‑5 thinking” shows a sharp drop in hallucinations (about six times fewer than o3), marking a clear leap forward in producing consistently accurate long-form content. Implementation and grading details for our evaluations on these benchmarks can be found in the system card.

Alongside improved factuality, GPT‑5 (with thinking) more honestly communicates its actions and capabilities to the user—especially for tasks which are impossible, underspecified, or missing key tools. In order to achieve a high reward during training, reasoning models may learn to lie about successfully completing a task or be overly confident about an uncertain answer. For example, to test this, we removed all the images from the prompts of the multimodal benchmark CharXiv, and found that OpenAI o3 still gave confident answers about non-existent images 86.7% of the time, compared to just 9% for GPT‑5.

When reasoning, GPT‑5 more accurately recognizes when tasks can’t be completed and communicates its limits clearly. We evaluated deception rates on settings involving impossible coding tasks and missing multimodal assets, and found that GPT‑5 (with thinking) is less deceptive than o3 across the board. On a large set of conversations representative of real production ChatGPT traffic, we’ve reduced rates of deception from 4.8% of o3 responses to 2.1% of GPT‑5 reasoning responses. While this represents a meaningful improvement for users, more work remains to be done, and we’re continuing research into improving the factuality and honesty of our models. Further details can be found in the system card.
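For a sense of scale, the honesty figures quoted above can be restated as relative reductions. The helper below is a plain arithmetic sketch; `relative_reduction` is a name chosen here for illustration, and the inputs are the percentages given in the text.

```python
def relative_reduction(before: float, after: float) -> float:
    """Fraction by which a rate fell, relative to its starting value."""
    return (before - after) / before


# Percentages quoted in the text.
deception = relative_reduction(4.8, 2.1)  # o3 vs. GPT-5 reasoning responses
charxiv = relative_reduction(86.7, 9.0)   # confident answers about missing images

print(f"{deception:.0%}")  # 56%
print(f"{charxiv:.0%}")    # 90%
```

Read this way, the deception rate on production-like traffic fell by a little over half, while confident answers about non-existent CharXiv images fell by roughly nine-tenths.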

[Chart panels: before mitigation and after mitigation]

GPT‑5 advances the frontier on safety. In the past, ChatGPT relied primarily on refusal-based safety training: based on the user’s prompt, the model should either comply or refuse. While this type of training works well for explicitly malicious prompts, it can struggle to handle situations where the user’s intent is unclear, or information could be used in benign or malicious ways. Refusal training is especially inflexible for dual-use domains such as virology, where a benign request can be safely completed at a high level, but might enable a bad actor if completed in detail.

For GPT‑5, we introduced a new form of safety-training — safe completions — which teaches the model to give the most helpful answer where possible while still staying within safety boundaries. Sometimes, that may mean partially answering a user’s question or only answering at a high level. If the model needs to refuse, GPT‑5 is trained to transparently tell you why it is refusing, as well as provide safe alternatives. In both controlled experiments and our production models, we find that this approach is more nuanced, enabling better navigation of dual-use questions, stronger robustness to ambiguous intent, and fewer unnecessary overrefusals. Read more about our new approach to safety-training, as well as full details on methodology, metrics, and results, in our safe completion paper.

Safety and helpfulness (given safe responses) across prompt intent types. GPT‑5 (with thinking) demonstrates both higher safety and greater helpfulness across all prompt intent types.

Overall, GPT‑5 is less effusively agreeable, uses fewer unnecessary emojis, and is more subtle and thoughtful in follow‑ups compared to GPT‑4o. It should feel less like “talking to AI” and more like chatting with a helpful friend with PhD‑level intelligence.

Earlier this year, we released an update to GPT‑4o that unintentionally made the model overly sycophantic, or excessively flattering or agreeable. We quickly rolled back the change and have since worked to understand and reduce this behavior by:

- Developing new evaluations to measure sycophancy levels

- Improving our training so the model is less sycophantic—for instance, adding examples that would normally lead to over-agreement, and then teaching it not to do that.

In targeted sycophancy evaluations using prompts specifically designed to elicit sycophantic responses, GPT‑5 meaningfully reduced sycophantic replies (from 14.5% to less than 6%). At times, reducing sycophancy can come with reductions in user satisfaction, but the improvements we made cut sycophancy by more than half while also delivering other measurable gains, so users continue to have high-quality, constructive conversations—in line with our goal to help people use ChatGPT well.

GPT‑5 is significantly better at instruction following, and we see a corresponding improvement in its ability to follow custom instructions.

We’re also launching a research preview of four new preset personalities for all ChatGPT users, made possible by the improvements on steerability. These personalities, available initially for text chat and coming later to Voice, let you set how ChatGPT interacts—whether concise and professional, thoughtful and supportive, or a bit sarcastic—without writing custom prompts. The four initial options, Cynic, Robot, Listener, and Nerd, are opt-in, adjustable anytime in settings, and designed to match your communication style.

All of these new personalities meet or exceed our bar on internal evals for reducing sycophancy.

We look forward to learning and iterating based on early feedback.

We decided to treat the “GPT‑5 thinking” model as High capability in the Biological and Chemical domain, and have implemented strong safeguards to sufficiently minimize the associated risks. We rigorously tested the model with our safety evaluations under our Preparedness Framework, completing 5,000 hours of red-teaming with partners like the CAISI and UK AISI.

Similar to our approach for ChatGPT Agent, while we do not have definitive evidence that this model could meaningfully help a novice to create severe biological harm (our defined threshold for High capability), we are taking a precautionary approach and are activating the required safeguards now in order to increase readiness for when such capabilities are available. As a result, “GPT‑5 thinking” has a robust safety stack with a multilayered defense system for biology: comprehensive threat modeling, training the model to not output harmful content through our new safe completions paradigm, always-on classifiers and reasoning monitors, and clear enforcement pipelines.

Read more about our robust safety approach for GPT‑5 in our system card.

For the most challenging, complex tasks, we are also releasing GPT‑5 pro, a variant of GPT‑5 that replaces OpenAI o3‑pro and thinks for even longer, using scaled but efficient parallel test-time compute to provide the highest quality and most comprehensive answers. GPT‑5 pro achieves the highest performance in the GPT‑5 family on several challenging intelligence benchmarks, including state-of-the-art performance on GPQA, which contains extremely difficult science questions.

In evaluations on over 1,000 economically valuable, real-world reasoning prompts, external experts preferred GPT‑5 pro over “GPT‑5 thinking” 67.8% of the time. GPT‑5 pro made 22% fewer major errors and excelled in health, science, mathematics, and coding. Experts rated its responses as relevant, useful, and comprehensive.

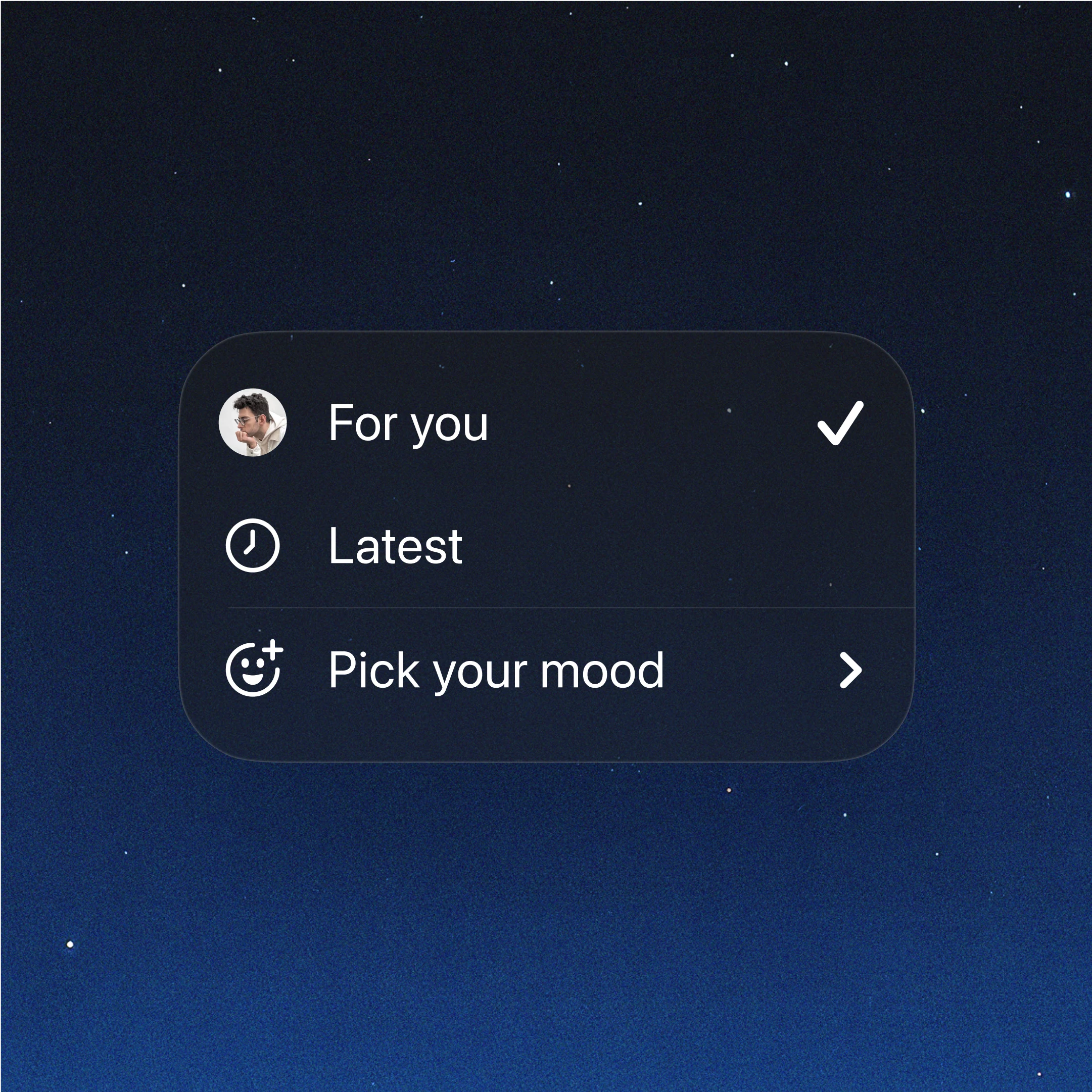

GPT‑5 is the new default in ChatGPT, replacing GPT‑4o, OpenAI o3, OpenAI o4-mini, GPT‑4.1, and GPT‑4.5 for signed-in users. Just open ChatGPT and type your question; GPT‑5 handles the rest, applying reasoning automatically when the response would benefit from it. Paid users can still select “GPT‑5 Thinking” from the model picker, or type something like ‘think hard about this’ in the prompt to ensure reasoning is used when generating a response.

GPT‑5 is starting to roll out today to all Plus, Pro, Team, and Free users, with access for Enterprise and Edu coming next week. Pro, Plus, and Team users can also start coding with GPT‑5 in the Codex CLI by signing in with ChatGPT.

As with GPT‑4o, the difference between free and paid access to GPT‑5 is usage volume. Pro subscribers get unlimited access to GPT‑5, and access to GPT‑5 pro. Plus users can use it comfortably as their default model for everyday questions, with significantly higher usage than free users. Team, Enterprise, and Edu customers can also use GPT‑5 comfortably as their default model for everyday work, with generous limits that make it easy for entire organizations to rely on GPT‑5. For ChatGPT free-tier users, full reasoning capabilities may take a few days to fully roll out. Once free users reach their GPT‑5 usage limits, they will transition to GPT‑5 mini, a smaller, faster, and highly capable model.

Author

OpenAI

Footnotes

*There is a small discrepancy with numbers reported in our previous blog post, as those were run on a former version of HLE.

**We find that the default grader in MultiChallenge (GPT-4o) frequently mis-scores model responses. Swapping the grader to a reasoning model, such as o3-mini, significantly improves grading accuracy on the samples we’ve inspected.

***For MMMUPro, we averaged scores for standard and vision.

Contributors

Aaditya Singh, Adam Fry, Adam Perelman, Adam Tart, Adi Ganesh, Ahmed El-Kishky, Aidan McLaughlin, Aiden Low, AJ Ostrow, Akhila Ananthram, Akshay Nathan, Alan Luo, Alec Helyar, Aleksander Madry, Aleksandr Efremov, Aleksandra Spyra, Alex Baker-Whitcomb, Alex Beutel, Alex Karpenko, Alex Makelov, Alex Neitz, Alex Wei, Alexandra Barr, Alexandre Kirchmeyer, Alexey Ivanov, Alexi Christakis, Alistair Gillespie, Allison Tam, Ally Bennett, Alvin Wan, Alyssa Huang, Amy McDonald Sandjideh, Amy Yang, Ananya Kumar, Andre Saraiva, Andrea Vallone, Andrei Gheorghe, Andres Garcia Garcia, Andrew Braunstein, Andrew Liu, Andrew Schmidt, Andrey Mereskin, Andrey Mishchenko, Andy Applebaum, Andy Rogerson, Ann Rajan, Annie Wei, Anoop Kotha, Anubha Srivastava, Anushree Agrawal, Arun Vijayvergiya, Ashley Tyra, Ashvin Nair, Avi Nayak, Ben Eggers, Bessie Ji, Beth Hoover, Bill Chen, Blair Chen, Boaz Barak, Borys Minaiev, Botao Hao, Bowen Baker, Brad Lightcap, Brandon McKinzie, Brandon Wang, Brendan Quinn, Brian Fioca, Brian Hsu, Brian Yang, Brian Yu, Brian Zhang, Brittany Brenner, Callie Riggins Zetino, Cameron Raymond, Camillo Lugaresi, Carolina Paz, Cary Hudson, Cedric Whitney, Chak Li, Charles Chen, Charlotte Cole, Chelsea Voss, Chen Ding, Chen Shen, Chengdu Huang, Chris Colby, Chris Hallacy, Chris Koch, Chris Lu, Christina Kaplan, Christina Kim, CJ Minott-Henriques, Cliff Frey, Cody Yu, Coley Czarnecki, Colin Reid, Colin Wei, Cory Decareaux, Cristina Scheau, Cyril Zhang, Cyrus Forbes, Da Tang, Dakota Goldberg, Dan Roberts, Dana Palmie, Daniel Kappler, Daniel Levine, Daniel Wright, Dave Leo, David Lin, David Robinson, Declan Grabb, Derek Chen, Derek Lim, Derek Salama, Dibya Bhattacharjee, Dimitris Tsipras, Dinghua Li, Dingli Yu, DJ Strouse, Drew Williams, Dylan Hunn, Ed Bayes, Edwin Arbus, Ekin Akyurek, Elaine Ya Le, Elana Widmann, Eli Yani, Elizabeth Proehl, Enis Sert, Enoch Cheung, Eri Schwartz, Eric Han, Eric Jiang, Eric Mitchell, Eric Sigler, Eric Wallace, Erik Ritter, Erin Kavanaugh, 
Evan Mays, Evgenii Nikishin, Fangyuan Li, Felipe Petroski Such, Filipe de Avila Belbute Peres, Filippo Raso, Florent Bekerman, Foivos Tsimpourlas, Fotis Chantzis, Francis Song, Francis Zhang, Gaby Raila, Garrett McGrath, Gary Briggs, Gary Yang, Giambattista Parascandolo, Gildas Chabot, Grace Kim, Grace Zhao, Gregory Valiant, Guillaume Leclerc, Hadi Salman, Hanson Wang, Hao Sheng, Haoming Jiang, Haoyu Wang, Haozhun Jin, Harshit Sikchi, Heather Schmidt, Henry Aspegren, Honglin Chen, Huida Qiu, Hunter Lightman, Ian Covert, Ian Kivlichan, Ian Silber, Ian Sohl, Ibrahim Hammoud, Ignasi Clavera, Ikai Lan, Ilge Akkaya, Ilya Kostrikov, Irina Kofman, Isak Etinger, Ishaan Singal, Jackie Hehir, Jacob Huh, Jacqueline Pan, Jake Wilczynski, Jakub Pachocki, James Lee, James Quinn, Jamie Kiros, Janvi Kalra, Jasmyn Samaroo, Jason Wang, Jason Wolfe, Jay Chen, Jay Wang, Jean Harb, Jeffrey Han, Jeffrey Wang, Jennifer Zhao, Jeremy Chen, Jerene Yang, Jerry Tworek, Jesse Chand, Jessica Landon, Jessica Liang, Ji Lin, Jiancheng Liu, Jianfeng Wang, Jie Tang, Jihan Yin, Joanne Jang, Joel Morris, Joey Flynn, Johannes Ferstad, Johannes Heidecke, John Fishbein, John Hallman, Jonah Grant, Jonathan Chien, Jonathan Gordon, Jongsoo Park, Jordan Liss, Jos Kraaijeveld, Joseph Guay, Joseph Mo, Josh Lawson, Josh McGrath, Joshua Vendrow, Joy Jiao, Julian Lee, Julie Steele, Julie Wang, Junhua Mao, Kai Chen, Kai Hayashi, Kai Xiao, Kamyar Salahi, Kan Wu, Karan Sekhri, Karan Sharma, Karan Singhal, Karen Li, Kenny Nguyen, Keren Gu-Lemberg, Kevin King, Kevin Liu, Kevin Stone, Kevin Yu, Kristen Ying, Kristian Georgiev, Kristie Lim, Kushal Tirumala, Kyle Miller, Lama Ahmad, Larry Lv, Laura Clare, Laurance Fauconnet, Lauren Itow, Lauren Yang, Laurentia Romaniuk, Leah Anise, Lee Byron, Leher Pathak, Leon Maksin, Leyan Lo, Leyton Ho, Li Jing, Liang Wu, Liang Xiong, Lien Mamitsuka, Lin Yang, Lindsay McCallum, Lindsey Held, Liz Bourgeois, Logan Engstrom, Lorenz Kuhn, Louis Feuvrier, Lu Zhang, Lucas Switzer, Lukas 
Kondraciuk, Lukasz Kaiser, Manas Joglekar, Mandeep Singh, Mandip Shah, Manuka Stratta, Marcus Williams, Mark Chen, Mark Sun, Marselus Cayton, Martin Li, Marvin Zhang, Marwan Aljubeh, Matt Nichols, Matthew Haines, Max Schwarzer, Mayank Gupta, Meghan Shah, Melody Huang, Meng Dong, Mengqing Wang, Mia Glaese, Micah Carroll, Michael Lampe, Michael Malek, Michael Sharman, Michael Zhang, Michele Wang, Michelle Pokrass, Mihai Florian, Mikhail Pavlov, Miles Wang, Ming Chen, Mingxuan Wang, Minnia Feng, Mo Bavarian, Molly Lin, Moose Abdool, Mostafa Rohaninejad, Nacho Soto, Natalie Staudacher, Natan LaFontaine, Nathan Marwell, Nelson Liu, Nick Preston, Nick Turley, Nicklas Ansman, Nicole Blades, Nikil Pancha, Nikita Mikhaylin, Niko Felix, Nikunj Handa, Nishant Rai, Nitish Keskar, Noam Brown, Ofir Nachum, Oleg Boiko, Oleg Murk, Olivia Watkins, Oona Gleeson, Pamela Mishkin, Patryk Lesiewicz, Paul Baltescu, Pavel Belov, Peter Zhokhov, Philip Pronin, Phillip Guo, Phoebe Thacker, Qi Liu, Qiming Yuan, Qinghua Liu, Rachel Dias, Rachel Puckett, Rahul Arora, Ravi Teja Mullapudi, Raz Gaon, Reah Miyara, Rennie Song, Rishabh Aggarwal, RJ Marsan, Robel Yemiru, Robert Xiong, Rohan Kshirsagar, Rohan Nuttall, Roman Tsiupa, Ronen Eldan, Rose Wang, Roshan James, Roy Ziv, Rui Shu, Ruslan Nigmatullin, Saachi Jain, Saam Talaie, Sam Altman, Sam Arnesen, Sam Toizer, Sam Toyer, Samuel Miserendino, Sandhini Agarwal, Sarah Yoo, Savannah Heon, Scott Ethersmith, Sean Grove, Sean Taylor, Sebastien Bubeck, Sever Banesiu, Shaokyi Amdo, Shengjia Zhao, Sherwin Wu, Shibani Santurkar, Shiyu Zhao, Shraman Ray Chaudhuri, Shreyas Krishnaswamy, Shuaiqi (Tony) Xia, Shuyang Cheng, Shyamal Anadkat, Simón Posada Fishman, Simon Tobin, Siyuan Fu, Somay Jain, Song Mei, Sonya Egoian, Spencer Kim, Spug Golden, SQ Mah, Steph Lin, Stephen Imm, Steve Sharpe, Steve Yadlowsky, Sulman Choudhry, Sungwon Eum, Suvansh Sanjeev, Tabarak Khan, Tal Stramer, Tao Wang, Tao Xin, Tarun Gogineni, Taya Christianson, Ted Sanders, Tejal 
Patwardhan, Thomas Degry, Thomas Shadwell, Tianfu Fu, Tianshi Gao, Timur Garipov, Tina Sriskandarajah, Toki Sherbakov, Tomer Kaftan, Tomo Hiratsuka, Tongzhou Wang, Tony Song, Tony Zhao, Troy Peterson, Val Kharitonov, Victoria Chernova, Vineet Kosaraju, Vishal Kuo, Vitchyr Pong, Vivek Verma, Vlad Petrov, Wanning Jiang, Weixing Zhang, Wenda Zhou, Wenlei Xie, Wenting Zhan, Wes McCabe, Will DePue, Will Ellsworth, Wulfie Bain, Wyatt Thompson, Xiangning Chen, Xiangyu Qi, Xin Xiang, Xinwei Shi, Yann Dubois, Yaodong Yu, Yara Khakbaz, Yifan Wu, Yilei Qian, Yin Tat Lee, Yinbo Chen, Yizhen Zhang, Yizhong Xiong, Yonglong Tian, Young Cha, Yu Bai, Yu Yang, Yuan Yuan, Yuanzhi Li, Yufeng Zhang, Yuguang Yang, Yujia Jin, Yun Jiang, Yunyun Wang, Yushi Wang, Yutian Liu, Zach Stubenvoll, Zehao Dou, Zheng Wu, Zhigang Wang