When ChatGPT launched in 2022, Intercom(opens in a new window) didn’t just watch the headlines—they mobilized. Within hours of GPT‑3.5's release, the customer service software company began experimenting, and just four months later launched Fin, their AI Agent that now resolves millions of customer queries each month.

That early momentum wasn’t an accident. As LLMs leapt forward, Intercom recognized that AI would reshape customer experience. Leadership acted quickly, spinning up a cross-functional task force, canceling non-AI projects, and committing $100 million to replatform the business around AI.

That decision sparked company-wide changes: reorganized product teams, a new AI-first helpdesk strategy, and a platform built to support Fin in handling high volumes and complex customer queries.

Below are three lessons from Intercom’s journey that any team—no matter where you’re starting—can put to work right now.

“AI-first has to be built in; you can’t bolt it on.”

Intercom tests models early, often, and learns deeply from their work.

The team began experimenting with generative models early, and their hands-on experience helped them map model limitations and spot opportunities. When GPT‑4 became available in early 2023, they were ready. Within four months, they launched Fin—and haven’t slowed down since.

“We were able to leverage GPT‑3.5 to have fluid conversations with glimpses of magic, but it wasn’t yet reliable enough to trust with our customers,” says Jordan Neill, SVP of Engineering. “Because we’d done the work, when GPT‑4 arrived, we knew it was ready, and we shipped Fin.”

That same fluency helped Intercom design Fin Tasks, a system that automates complex workflows like refunds and technical support. While the team initially planned for a reasoning model based stack, their evaluations showed GPT‑4.1 could handle the job on its own—with high reliability and lower latency.

Today, GPT‑4.1 powers a growing share of Intercom’s AI usage, including key logic inside Fin Tasks. The team also discovered that adding chain-of-thought prompting to non-reasoning queries closed performance gaps.

Intercom’s takeaway: the better you know your models, the faster you can adapt as the state of the art evolves.

In Intercom’s evaluations, GPT‑4.1 showed highest reliability in completing tasks while delivering a 20% cost reduction compared to GPT‑4o. Completeness numbers were averaged across 5 independent runs (using Pass@k); a result is only counted as 'complete' if it is successful in all 5 runs, to reduce variance.

To move fast, you have to measure what works—and why.

Intercom’s ability to adopt new models, modalities, and architectures quickly is rooted in their rigorous evaluation process. Every new OpenAI model—whether used for Fin Voice, powered by the Realtime API, or for Fin Tasks, powered by GPT‑4.1—is put through structured offline tests and live A/B trials to assess for instruction following, tool call accuracy, and overall coherence before deployment.

For example, the team benchmarks models against transcripts of actual support interactions, evaluating how well they handle multi-step instructions like refunds, maintain Fin’s brand voice, and execute function calls reliably. These results inform live A/B tests that compare resolution rates and customer satisfaction across models like GPT‑4 and GPT‑4.1.

This approach helped Intercom migrate from GPT‑4 to GPT‑4.1 in just days. After confirming improvements in instruction handling and function execution, they rolled out GPT‑4.1 across Fin Tasks and saw immediate gains in both performance and user satisfaction.

“When GPT‑4.1 dropped, we had eval results within 48 hours and a rollout plan right after,” says Pedro Tabacof, Principal Machine Learning Scientist at Intercom. “We immediately saw that GPT‑4.1 had a good mix of intelligence and latency for our customers’ needs.”

For Fin Voice, the same evaluation process helped Intercom validate new voice model snapshots and pinpoint improvements in latency, function execution, and script adherence: all essential to delivering human-quality phone support.

Intercom expanded their evals to capture the extra dimension that voice brings to interactions. They systematically assess Fin Voice for factors like personality, tone, interruption handling, and background noise to ensure high-quality customer experiences.

Intercom built for change from day one, designing an architecture flexible enough to evolve alongside the models it depends on.

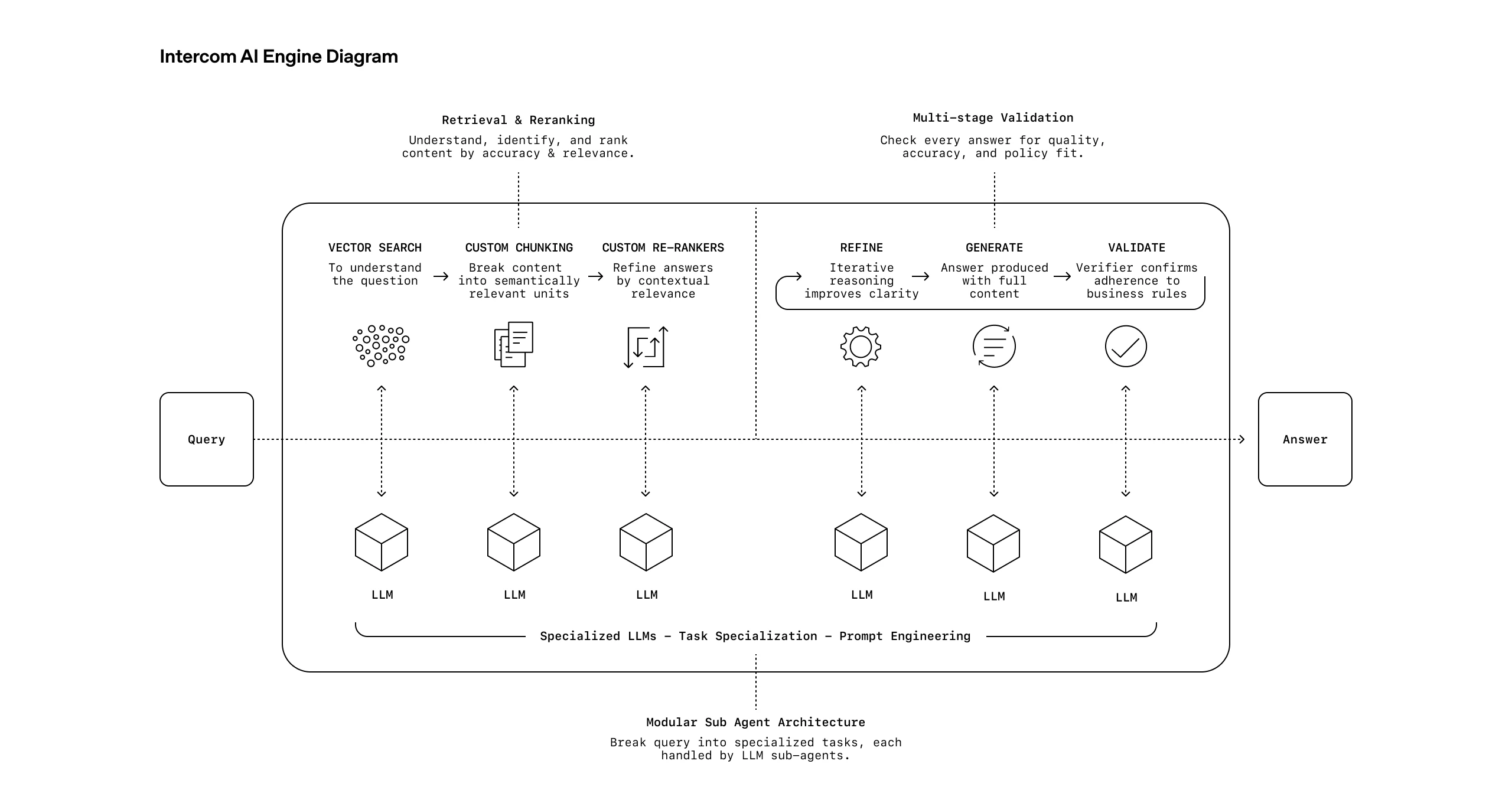

Fin’s system is modular by design, supporting multiple modalities like chat, email, and voice each with different tradeoffs for latency and complexity. The architecture allows Intercom to route queries to the best model for the job and swap models without reengineering the underlying system.

That flexibility is deliberate, and constantly evolving. Fin’s architecture is now on its third major iteration, with the next one already in development. As models improve, the team adds complexity where needed to unlock new capabilities and simplifies where possible.

This adaptability proved critical with Fin Tasks. Initially, the team assumed they’d need reasoning based models to support Fin Tasks—which enables Fin to resolve complex customer queries and executive multi-step processes like issuing refunds, making account changes, or technical troubleshooting.

But in testing, GPT‑4.1’s instruction-following capabilities outperformed expectations delivering the same reliability at lower latency and cost.

“Honestly, I don’t think people talk about GPT‑4.1 enough,” says Pratik Bothra, Principal Machine Learning Engineer at Intercom. “We were genuinely surprised by the latency and cost profile. It lets us pivot our architecture and remove a lot of complexity.”

Fin AI Engine™

The team is just getting started. Powered by advanced models and built on a modular, model-agnostic architecture, Intercom is expanding beyond customer support to power workflows across the business, delivering faster resolutions and better customer experiences:

- Support teams: Resolving the majority of inbound queries across chat, email, voice, and more with Fin AI Agent

- Ops teams: Automating complex workflows like refunds, account changes, and subscription updates with Fin Tasks

- Product teams: Using Intercom’s MCP Server, AI tools like ChatGPT can access customer conversations, tickets, and user data - helping teams across the business spot bugs, shape roadmaps, refine messaging, and prepare for QBRs.

Intercom built a scalable AI platform by staying rigorous on evaluation, grounded in performance, and flexible in design—redefining support and offering lessons for any company building with AI.