Our work to make ChatGPT as helpful as possible is constant and ongoing. We’ve seen people turn to it in the most difficult of moments. That’s why we continue to improve how our models recognize and respond to signs of mental and emotional distress, guided by expert input.

This work has already been underway, but we want to proactively preview our plans for the next 120 days, so you won’t need to wait for launches to see where we’re headed. The work will continue well beyond this period of time, but we’re making a focused effort to launch as many of these improvements as possible this year.

Last week, we shared four focus areas when it comes to helping people when they need it most:

- Expanding interventions to more people in crisis

- Making it even easier to reach emergency services and get help from experts

- Enabling connections to trusted contacts

- Strengthening protections for teens

Some of this work will move very quickly, while other parts will take more time.

Today, we’re sharing more on how we’re partnering with experts to guide our work, leveraging our reasoning models for sensitive moments, as well as details on one of our focus areas: Strengthening protections for teens.

AI is new and evolving, and we want to make sure our progress is guided by deep expertise on well-being and mental health. Together, our Expert Council on Well-Being and AI and our Global Physician Network provide both the depth of specialized medical expertise and the breadth of perspective needed to inform our approach. We’ll share more about these efforts during our 120-day initiative.

Expert Council on Well-Being and AI

Earlier this year, we began convening a council of experts in youth development, mental health, and human-computer interaction. The council’s role is to shape a clear, evidence-based vision for how AI can support people’s well-being and help them thrive.

Their input will help us define and measure well-being, set priorities, and design future safeguards—such as future iterations of parental controls—with the latest research in mind. While the council will advise on our product, research, and policy decisions, OpenAI remains accountable for the choices we make.

Global Physician Network

This council will work in tandem with our Global Physician Network, a broader pool of more than 250 physicians who have practiced in 60 countries. We have worked with this network over the past year on efforts like our HealthBench evaluations, which are designed to better measure the capabilities of AI systems for health.

Of this broader pool, more than 90 physicians across 30 countries—including psychiatrists, pediatricians, and general practitioners—have already contributed to our research on how our models should behave in mental health contexts. Their input directly informs our safety research, model training, and other interventions, helping us to quickly engage the right specialists when needed.

We are adding even more clinicians and researchers to our network, including those with deep expertise in areas like eating disorders, substance use, and adolescent health.

Our reasoning models, like GPT‑5-thinking and o3, are built to spend more time thinking and reasoning through context before answering. They are trained with a method we call deliberative alignment, and our testing shows that reasoning models more consistently follow and apply safety guidelines and are more resistant to adversarial prompts.

We recently introduced a real-time router that can choose between efficient chat models and reasoning models based on the conversation context. We’ll soon begin to route some sensitive conversations—like when our system detects signs of acute distress—to a reasoning model, like GPT‑5-thinking, so it can provide more helpful and beneficial responses, regardless of which model a person first selected. We’ll iterate on this approach thoughtfully.
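To make the routing idea concrete, here is a minimal sketch of how such a decision could look. It is an illustration only, not a description of the actual router: the model identifiers, the `ConversationSignals` type, and the assumption that an upstream system supplies an `acute_distress` flag are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical model identifiers, used only for illustration.
CHAT_MODEL = "efficient-chat-model"
REASONING_MODEL = "reasoning-model"


@dataclass(frozen=True)
class ConversationSignals:
    """Signals an upstream system might attach to a conversation (assumed)."""
    acute_distress: bool = False        # assumed output of a separate detector
    needs_deep_reasoning: bool = False  # assumed general complexity signal


def route(selected_model: str, signals: ConversationSignals) -> str:
    """Return the model that should answer the next message."""
    # Sensitive conversations go to a reasoning model regardless of
    # which model the person first selected.
    if signals.acute_distress:
        return REASONING_MODEL
    # Otherwise, escalate only when the context seems to call for it.
    if signals.needs_deep_reasoning:
        return REASONING_MODEL
    return selected_model
```

In this sketch, `route(CHAT_MODEL, ConversationSignals(acute_distress=True))` returns the reasoning model even though the chat model was selected, which mirrors the "regardless of which model a person first selected" behavior described above.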

Many young people are already using AI. They are among the first “AI natives,” growing up with these tools as part of daily life, much like earlier generations did with the internet or smartphones. That creates real opportunities for support, learning, and creativity, but it also means families and teens may need support in setting healthy guidelines that fit a teen’s unique stage of development.

Parental Controls

Earlier this year, we began building more ways for families to use ChatGPT together and decide what works best in their home. Within the next month, parents will be able to:

- Link their account with their teen’s account (minimum age of 13) through a simple email invitation.

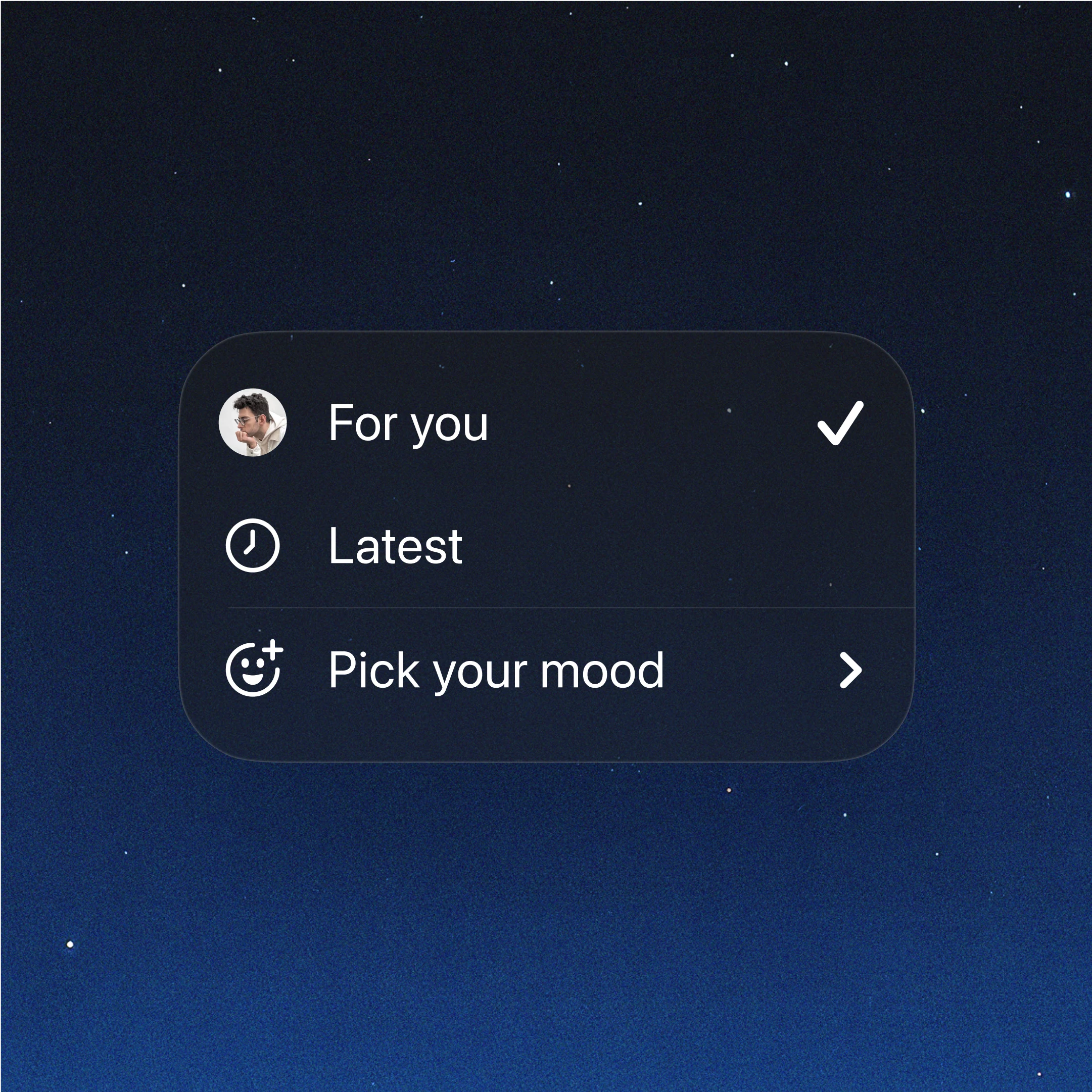

- Control how ChatGPT responds to their teen with age-appropriate model behavior rules, which are on by default.

- Manage which features to disable, including memory and chat history.

- Receive notifications when the system detects their teen is in a moment of acute distress. Expert input will guide this feature to support trust between parents and teens.
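As a rough illustration, the controls listed above could be modeled as a small settings object attached to a linked account. This is a sketch under stated assumptions, not the actual product design: the class names, field names, and every default other than the age-appropriate rules being on are hypothetical.

```python
from dataclasses import dataclass, field

MIN_TEEN_AGE = 13  # minimum age stated in the list above


@dataclass
class TeenControls:
    """Hypothetical per-teen settings a parent could manage."""
    age_appropriate_rules: bool = True   # stated above as on by default
    memory_enabled: bool = True          # default is an assumption
    chat_history_enabled: bool = True    # default is an assumption
    distress_notifications: bool = True  # default is an assumption


@dataclass
class LinkedAccount:
    """Hypothetical record created once a teen accepts an email invitation."""
    parent_email: str
    teen_email: str
    controls: TeenControls = field(default_factory=TeenControls)


def link_accounts(parent_email: str, teen_email: str, teen_age: int) -> LinkedAccount:
    """Link a parent and teen account, enforcing the minimum age."""
    if teen_age < MIN_TEEN_AGE:
        raise ValueError(f"teen must be at least {MIN_TEEN_AGE} years old")
    return LinkedAccount(parent_email=parent_email, teen_email=teen_email)
```

A parent who wants to turn off memory would then set `account.controls.memory_enabled = False` on the linked account, leaving the other settings at their defaults.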

These controls add to features we have rolled out for all users, including in-app reminders during long sessions to encourage breaks.

These steps are only the beginning. We will continue learning and strengthening our approach, guided by experts, with the goal of making ChatGPT as helpful as possible. We look forward to sharing our progress over the coming 120 days.